Mindfest Virtual Poster

A "virtual poster" to go with my Mindfest poster

Overall Idea

I have developed an AI-powered cognitive co-pilot to accelerate the speed at which neuroscience researchers develop robust theories.

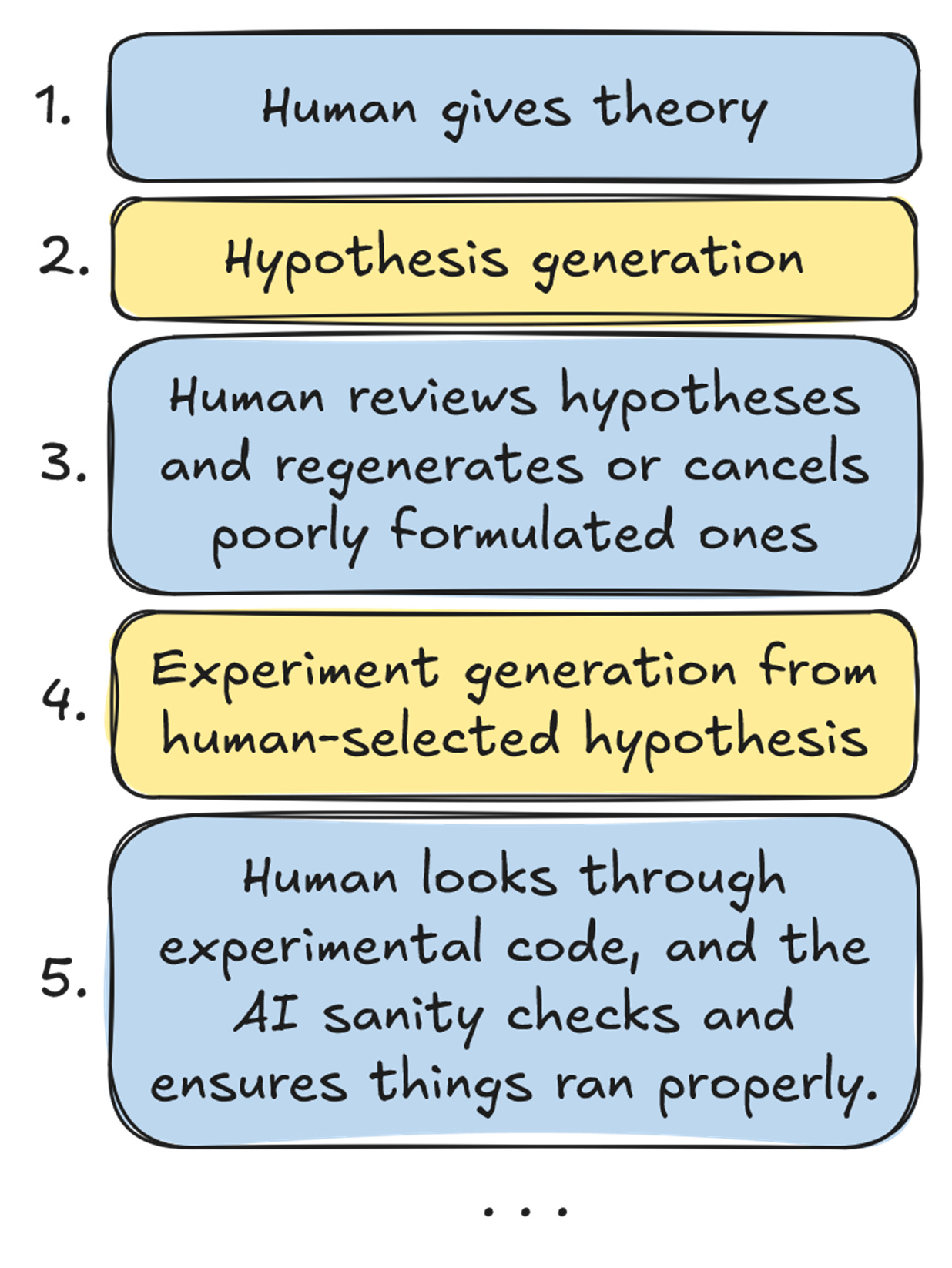

The human comes up with a theory. The AI system comes up with falsifiable hypotheses in natural language. The AI then codes up some experiments it can perform on a computational dataset based on the hypotheses. Finally the AI provides the results of that experiment to a human in a way that they can interpret and use to refine their theory.

Why does this matter?

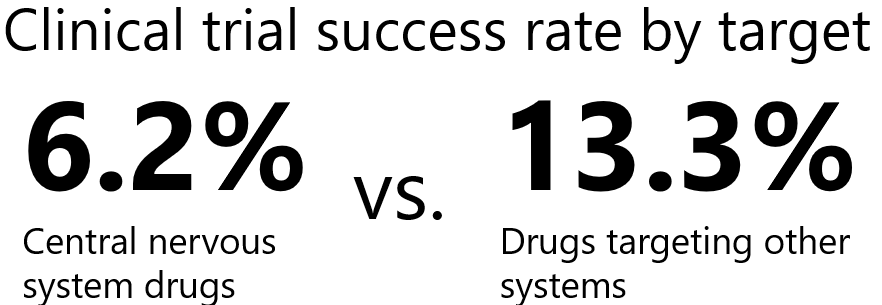

According to this study drugs that target the central nervous system only succeed half as often compared to drugs that target other systems. This is a wider pattern across clinical science, clinical interventions that target the brain are much less likely to succeed. The authors of this paper (and me) make the point that one of the primary causes for the failure rates of clinical brain science is the fact that our hypotheses about the brain aren’t very robust or high-quality compared to our hypotheses about other systems in the body.

How well does this system actually work?

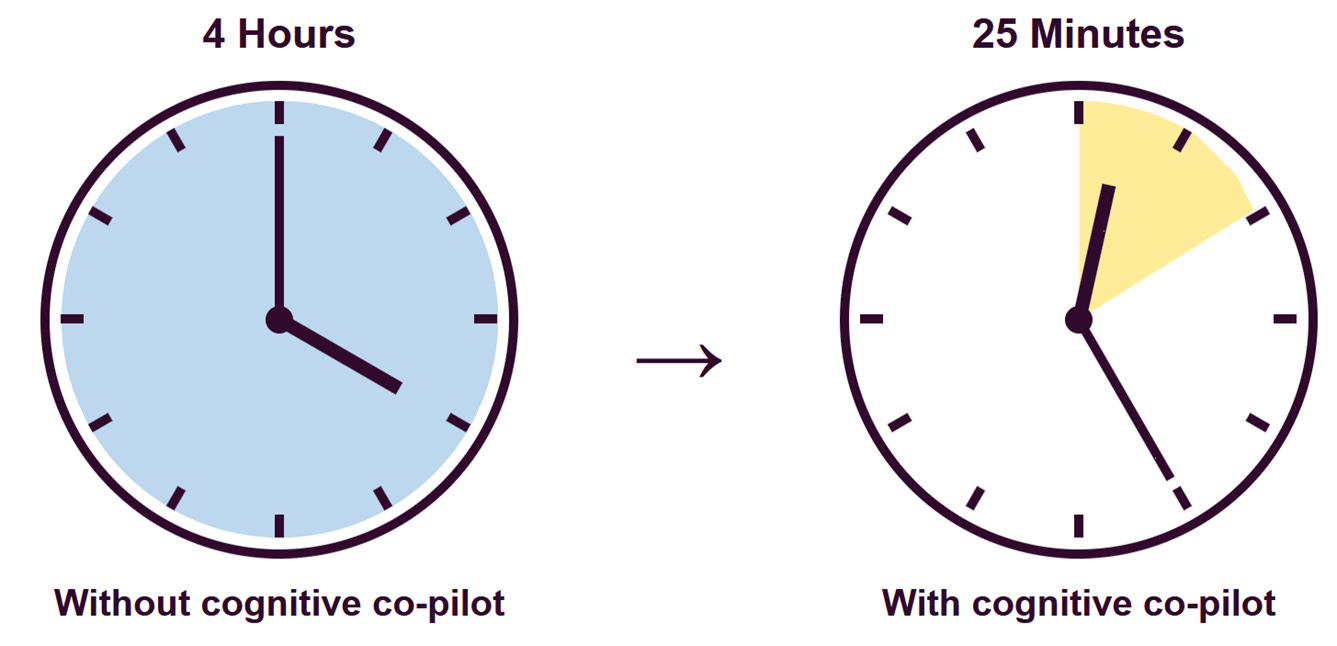

It works really well! In early tests computational neuroscientists are able to do theory refinement that would take 4 hours using standard methods in 25 minutes with assistance from the cognitive co-pilot.

How does the system actually work?

The cognitive co-pilot is actually many smaller and dumber AI’s in a trenchcoat. The architecture is based around “mini-experts.” A mini-expert is an AI that uses RAG and knowledge distillation to have expertise on a very specific methodology and how you implement it and interpret results (usually a mini-expert has around 15 papers as its’ background information). This architecture allows the mini-experts to collaborate to form many hypothesis and many experiments for a single theory. The architecture is also highly modular, meaninng that it will be easy for computational neuroscientists to program their own “mini-expert” for doing experiments that use a specific method.

What about safety/explainability?

I am using a framework that comes from Google Deepmind called MONA (Myopic Optimization with Non-myopic Approval). It means that a human is supervising the process instead of just the final outcome. For my system this means that at every single research step a human expert views what has been generated and either validates that the AI is doing things properly, or can select that the system might not be doing things correctly in some way. Allowing the human to act as a "checker” as opposed to the one generating the hypotheses and experimental code is what contributes to the speed up we are seeing from early results.